Long Short Term Memory.

Use the previous seven days' closing prices to forecast today's closing price of the stock.

(*This is my favorite model; it performs well.)
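The seven-day setup can be sketched as a sliding window over the price series. This is only a minimal illustration: the helper name `make_windows` and the sample prices are made up for this example, not taken from the project's code.

```python
import numpy as np

def make_windows(prices, window=7):
    """Split a 1-D price series into (inputs, targets) pairs.

    Each input row holds `window` consecutive closing prices; the
    target is the closing price of the day that follows them.
    """
    prices = np.asarray(prices, dtype=float)
    n_samples = len(prices) - window
    if n_samples <= 0:
        raise ValueError("need more than `window` prices")
    X = np.stack([prices[i:i + window] for i in range(n_samples)])
    y = prices[window:]
    return X, y

# Hypothetical closing prices, for illustration only.
closes = [10.0, 10.5, 10.2, 10.8, 11.0, 10.9, 11.3, 11.5, 11.4, 11.8]
X, y = make_windows(closes, window=7)
print(X.shape, y.shape)  # (3, 7) (3,)
```

Each row of `X` is one training input and the matching entry of `y` is the price the model should predict.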

An LSTM (Long Short-Term Memory) network is a special type of RNN (Recurrent Neural Network).

LSTMs were designed to address a weakness of standard RNNs: they struggle to learn long-term dependencies.

Backpropagation through time multiplies many small numbers together ->

Errors from time steps further back have smaller and smaller gradients ->

The parameters become biased toward capturing short-term dependencies.
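The chain above can be illustrated numerically. The sketch below assumes, purely for illustration, that every time step scales the gradient by the same factor of 0.5; in a real RNN the factor varies from step to step.

```python
import numpy as np

# Assumed per-step gradient factor; any magnitude below 1 shrinks the product.
factor = 0.5
steps_back = np.arange(1, 31)

# Scale of the gradient that reaches a time step k steps in the past.
gradient_scale = factor ** steps_back

for k in (1, 5, 10, 30):
    print(f"{k:2d} steps back: {gradient_scale[k - 1]:.2e}")
```

After 30 steps the scale is below one billionth, so errors from distant time steps barely move the weights.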

To handle the vanishing gradient problem, we could adjust or use:

Activation Function

Parameter Initialization

Gated Cells (LSTMs)

Key concepts

Maintain a cell state

Backpropagation through time with partially uninterrupted gradient flow

Use gates to control the flow of information

Forget gate gets rid of irrelevant information

Store relevant information from current input

Selectively update cell state

Output gate returns a filtered version of the cell state

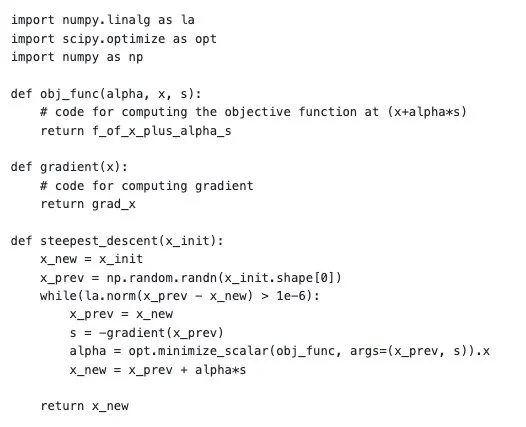
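The key concepts above can be sketched as a single LSTM time step in NumPy. This follows the standard LSTM equations and is not the model code used for the forecast; the weight layout, the random initialization, and the sample inputs are assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, hidden + inputs) and b has shape (4 * hidden,).
    The four row blocks are the forget, input, candidate and output parts.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])  # forget gate: what to drop from the cell state
    i = sigmoid(z[1 * hidden:2 * hidden])  # input gate: what to store from the current input
    g = np.tanh(z[2 * hidden:3 * hidden])  # candidate values to store
    o = sigmoid(z[3 * hidden:4 * hidden])  # output gate: what to expose
    c = f * c_prev + i * g                 # selectively update the cell state
    h = o * np.tanh(c)                     # filtered version of the cell state
    return h, c

rng = np.random.default_rng(0)
inputs, hidden = 1, 4
W = rng.normal(scale=0.1, size=(4 * hidden, hidden + inputs))
b = np.zeros(4 * hidden)

h, c = np.zeros(hidden), np.zeros(hidden)
# Seven scaled, made-up prices, one per time step.
for price in [0.1, 0.2, 0.15, 0.3, 0.25, 0.35, 0.4]:
    h, c = lstm_step(np.array([price]), h, c, W, b)
print(h.shape, c.shape)
```

The cell state `c` is updated only by an element-wise multiply and an add, which is what lets gradients flow back through time with less interruption.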

Gradient Descent

The negative of the gradient of a differentiable function points downhill, i.e. towards points in the domain with lower values. This suggests that, when searching for a minimum, we should keep moving in the direction of the negative gradient until we reach a point where the gradient is zero.

Gradient descent is effective because the step size adapts to how far the current guess is from the optimum: each step is the learning rate times the gradient. When the guess is far from the optimum, the slope is usually steep, so the step is bigger. When the guess is close to the optimum, the slope flattens and the step gets smaller. Therefore, in the code, we can use the magnitude of the step size to decide when to stop.
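A minimal sketch of this stopping rule on a one-dimensional function follows. The function name `gradient_descent`, the learning rate, and the threshold `min_step` are illustrative choices, not values from the project.

```python
def gradient_descent(grad, x0, learning_rate=0.1, min_step=1e-6, max_iters=10_000):
    """Minimize a 1-D function given its gradient.

    Stops when the step size falls below `min_step`, or after `max_iters`.
    Returns the final position and the number of steps taken.
    """
    x = x0
    steps_taken = 0
    for _ in range(max_iters):
        step = learning_rate * grad(x)  # big far from the minimum, small near it
        if abs(step) < min_step:
            break
        x -= step                       # move against the gradient
        steps_taken += 1
    return x, steps_taken

# Example: f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min, n_steps = gradient_descent(lambda x: 2.0 * (x - 3.0), x0=10.0)
print(x_min, n_steps)
```

The `max_iters` cap is a safeguard in case the step size never drops below the threshold, for example when the learning rate is too large.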

In a neural network, this method is used to find the weights and biases that minimize the loss function (here, the residual sum of squares).
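As a small illustration, the sketch below uses gradient descent to fit one weight and one bias by minimizing the residual sum of squares. It is a single linear unit rather than a full network, and the data and learning rate are made up for this example.

```python
import numpy as np

# Made-up data generated from y = 2x + 1, for illustration only.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

w, b = 0.0, 0.0          # initial guess for the weight and bias
learning_rate = 0.01

for _ in range(5000):
    residual = (w * x + b) - y
    # Gradients of RSS = sum(residual^2) with respect to w and b.
    grad_w = 2.0 * np.sum(residual * x)
    grad_b = 2.0 * np.sum(residual)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

rss = np.sum(((w * x + b) - y) ** 2)
print(w, b, rss)
```

The fitted `w` and `b` approach 2 and 1, the values used to generate the data. A neural network applies the same update to every weight and bias, with the gradients computed by backpropagation.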